The cublasStrsm() function allows us to solve linear systems in parallel, but only when triangular matrices are involved.

For the general case, we need to perform an LU decomposition of the matrix beforehand.

The Blocked LU decomposition works like the standard LU decomposition, but instead of applying the algorithm to scalar entries, each step is performed on block matrices.

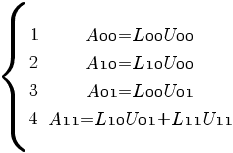

Let's start with the LU decomposition of a sample matrix before introducing the Blocked LU decomposition algorithm.

Standard LU decomposition :

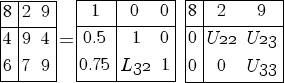

$$
\begin{bmatrix} 8 & 2 & 9 \\ 4 & 9 & 4 \\ 6 & 7 & 9 \end{bmatrix}
=
\begin{bmatrix} 1 & 0 & 0 \\ L_{21} & 1 & 0 \\ L_{31} & L_{32} & 1 \end{bmatrix}
\begin{bmatrix} U_{11} & U_{12} & U_{13} \\ 0 & U_{22} & U_{23} \\ 0 & 0 & U_{33} \end{bmatrix}
\qquad (1)
$$

From that we can easily compute the first row of U and the first column of L:

$$
8 = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix} \begin{bmatrix} U_{11} & 0 & 0 \end{bmatrix}^T \Leftrightarrow U_{11} = 8
$$

$$
2 = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix} \begin{bmatrix} U_{12} & U_{22} & 0 \end{bmatrix}^T \Leftrightarrow U_{12} = 2
$$

$$
9 = \begin{bmatrix} 1 & 0 & 0 \end{bmatrix} \begin{bmatrix} U_{13} & U_{23} & U_{33} \end{bmatrix}^T \Leftrightarrow U_{13} = 9
$$

$$
4 = \begin{bmatrix} L_{21} & 1 & 0 \end{bmatrix} \begin{bmatrix} U_{11} & 0 & 0 \end{bmatrix}^T \Leftrightarrow L_{21} = 4 / U_{11} \Leftrightarrow L_{21} = 1/2
$$

$$
6 = \begin{bmatrix} L_{31} & L_{32} & 1 \end{bmatrix} \begin{bmatrix} U_{11} & 0 & 0 \end{bmatrix}^T \Leftrightarrow L_{31} = 6 / U_{11} \Leftrightarrow L_{31} = 3/4
$$

We can now rewrite (1):

$$
\begin{bmatrix} 8 & 2 & 9 \\ 4 & 9 & 4 \\ 6 & 7 & 9 \end{bmatrix}
=
\begin{bmatrix} 1 & 0 & 0 \\ 0.5 & 1 & 0 \\ 0.75 & L_{32} & 1 \end{bmatrix}
\begin{bmatrix} 8 & 2 & 9 \\ 0 & U_{22} & U_{23} \\ 0 & 0 & U_{33} \end{bmatrix}
\qquad (2)
$$

If we regroup the terms by block, naming:

$$
A_1 = \begin{bmatrix} 9 & 4 \\ 7 & 9 \end{bmatrix}
\qquad
L_1 = \begin{bmatrix} 1 & 0 \\ L_{32} & 1 \end{bmatrix}
\qquad
U_1 = \begin{bmatrix} U_{22} & U_{23} \\ 0 & U_{33} \end{bmatrix}
$$

equation (2) becomes:

$$
\begin{bmatrix} 8 & \begin{bmatrix} 2 & 9 \end{bmatrix} \\ \begin{bmatrix} 4 & 6 \end{bmatrix}^T & A_1 \end{bmatrix}
=
\begin{bmatrix} 1 & 0 \\ \begin{bmatrix} 0.5 & 0.75 \end{bmatrix}^T & L_1 \end{bmatrix}
\begin{bmatrix} 8 & \begin{bmatrix} 2 & 9 \end{bmatrix} \\ 0 & U_1 \end{bmatrix}
$$

Applying the block matrix product leads to:

$$
A_1 = \begin{bmatrix} 0.5 & 0.75 \end{bmatrix}^T \begin{bmatrix} 2 & 9 \end{bmatrix} + L_1 U_1
$$

$$
\Leftrightarrow L_1 U_1 = A_1 - \begin{bmatrix} 0.5 & 0.75 \end{bmatrix}^T \begin{bmatrix} 2 & 9 \end{bmatrix}
$$

$$
\Leftrightarrow L_1 U_1 = \begin{bmatrix} 8 & -0.5 \\ 5.5 & 2.25 \end{bmatrix}
$$

Then, to compute $L_1$ and $U_1$, we can apply the same steps to

$$
\begin{bmatrix} 8 & -0.5 \\ 5.5 & 2.25 \end{bmatrix}
$$

If we repeat the process until the remaining matrix is of size $1 \times 1$, we get the LU decomposition of A.

Finally:

$$
\begin{bmatrix} 8 & 2 & 9 \\ 4 & 9 & 4 \\ 6 & 7 & 9 \end{bmatrix}
=
\begin{bmatrix} 1 & 0 & 0 \\ 0.5 & 1 & 0 \\ 0.75 & 0.6875 & 1 \end{bmatrix}
\begin{bmatrix} 8 & 2 & 9 \\ 0 & 8 & -0.5 \\ 0 & 0 & 2.59375 \end{bmatrix}
$$

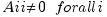

I deliberately used a sample where we never need to divide by 0. When that is not the case, a permutation matrix must be introduced to keep track of row pivoting.
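To make the pivoting remark concrete, here is a hedged CPU sketch of LU with partial (row) pivoting. At each step it picks the entry of largest magnitude in the current column, swaps rows, and records the swap in a vector. The name `luPartialPivot` and the row-major storage are assumptions made for this illustration.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // row-major: a[row][col]

// In-place LU decomposition with partial (row) pivoting on a square matrix.
// pivots[k] records which row was swapped with row k at step k.
// Returns false as soon as a pivot is smaller than epsilon in magnitude.
bool luPartialPivot(Matrix& a, std::vector<std::size_t>& pivots, double epsilon)
{
    const std::size_t n = a.size();
    pivots.assign(n, 0);
    for (std::size_t k = 0; k < n; ++k) {
        // Pick the row with the largest magnitude entry in column k.
        std::size_t pivotRow = k;
        for (std::size_t i = k + 1; i < n; ++i)
            if (std::fabs(a[i][k]) > std::fabs(a[pivotRow][k]))
                pivotRow = i;
        pivots[k] = pivotRow;
        if (pivotRow != k)
            std::swap(a[pivotRow], a[k]);
        if (std::fabs(a[k][k]) < epsilon)
            return false;  // matrix is numerically singular
        // Usual elimination step on the rows below the pivot.
        for (std::size_t i = k + 1; i < n; ++i) {
            a[i][k] /= a[k][k];
            for (std::size_t j = k + 1; j < n; ++j)
                a[i][j] -= a[i][k] * a[k][j];
        }
    }
    return true;
}
```

Storing one swap index per step is equivalent to storing the permutation matrix, and it is cheaper to apply afterwards.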

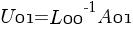

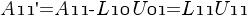

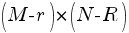

Block LU decomposition :

Block LU decomposition is very similar to the algorithm we just described. The only difference is that we introduce the block matrix at the beginning of the process.

We choose a sub-matrix block size $r$ and rewrite the matrix $A$ of size $M \times N$ as a block matrix:

$$
\begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix}
=
\begin{bmatrix} L_{11} & 0 \\ L_{21} & L_{22} \end{bmatrix}
\begin{bmatrix} U_{11} & U_{12} \\ 0 & U_{22} \end{bmatrix}
$$

where $A_{11}$ is $r \times r$.

As we did in the standard LU decomposition section, we can easily compute the first block column of L and the first block row of U, and then apply the process iteratively to the remaining block.

As the usual rules of matrix multiplication hold with block matrices, we can write:

$$
\begin{aligned}
L_{11} U_{11} &= A_{11} & (1) \\
L_{21} U_{11} &= A_{21} & (2) \\
L_{11} U_{12} &= A_{12} & (3) \\
L_{21} U_{12} + L_{22} U_{22} &= A_{22} & (4)
\end{aligned}
$$

From Eq. (1) and (2) we get $L_{11}$, $L_{21}$ and $U_{11}$ by applying the LU decomposition (see the previous section) to the matrix of size $M \times r$ composed of $A_{11}$ and $A_{21}$.

From Eq. (3) we get $U_{12} = L_{11}^{-1} A_{12}$, a triangular system of the kind cublasStrsm() solves.

Finally, we rearrange Eq. (4) as:

$$
A'_{22} = A_{22} - L_{21} U_{12} = L_{22} U_{22} \qquad (5)
$$

From this equation we see that the problem of finding $L_{22}$ and $U_{22}$ reduces to finding the LU factorization of the $(M-r) \times (N-r)$ matrix $A'_{22}$. This can be done by applying the steps outlined above to $A'_{22}$ instead of to $A$.

For an in-place algorithm, A is overwritten by L and U; the 1s on the diagonal of L do not need to be stored explicitly. Similarly, when A is updated by Eq. (5), this may also be done in place. After $k$ of the $K = \lceil \min(M, N) / r \rceil$ steps, the first $kr$ columns of L and the first $kr$ rows of U have been evaluated, and the trailing $(M - kr) \times (N - kr)$ block of A holds the updated matrix that remains to be factorized.

Implementation :

As stressed in the previous section, we first need to implement the standard LU decomposition.

    #include <algorithm>  // std::min, std::max
    #include <cmath>      // fabs
    #include <cublas.h>   // legacy cuBLAS API

    // InfoStat and CHECK_CUBLAS are helpers that are not shown in this post.

    //***************************************************************************************
    // void DecomposeLU( int M, int N, int lda, float* A,
    //                   int* P, float epsilon, InfoStat& stat)
    //
    // M       : Num of rows of A
    // N       : Num of columns of A
    // A       : Float matrix of size M*N (column-major, in device memory);
    //           on output contains the result of the LU decomposition.
    //           The diagonal elements of L are not stored in A (assuming they are all 1)
    // lda     : Leading dim of A, lda >= std::max(1,M)
    // P       : Permutation vector of size M
    // epsilon : Epsilon (used to test for singularities)
    // stat    : return status
    //***************************************************************************************
    void DecomposeLU(int M, int N, int lda, float* A, int* P, float epsilon, InfoStat& stat)
    {
        cublasStatus cuStat;

        // Preconditions
        if (M <= 0 || N <= 0 || lda < std::max(1, M))
        {
            stat._info = -1;
            if (M <= 0)
                stat._str = "M<=0";
            if (N <= 0)
                stat._str = "N<=0";
            if (lda < std::max(1, M))
                stat._str = "lda < std::max(1,M)";
            return;
        }

        int minDim = std::min(M, N);

        // All minDim columns are processed, so that the last column of a
        // tall panel (M > N) is pivoted and scaled as well.
        for (int k = 0; k < minDim; k++)
        {
            // cublasIsamax returns a 1-based index counted from row k;
            // pivotRow is a 0-based row of the current submatrix.
            int pivotRow = k - 1 + cublasIsamax(M - k, A + k + k * lda, 1);
            int kp1 = k + 1;
            P[k] = pivotRow;
            if (pivotRow != k)
            {
                // Swap rows k and pivotRow over the N columns
                cublasSswap(N, A + pivotRow, lda, A + k, lda);
            }

            // Copy the pivot back to the host to test for singularity
            float valcheck;
            cublasGetVector(1, sizeof(float), A + k + k * lda, 1, &valcheck, 1);
            if (fabs(valcheck) < epsilon)
            {
                stat._info = k + 1;
                stat._str = " Matrix is Singular ";
                return;
            }

            // Column k of L: divide the entries below the pivot by the pivot
            if (kp1 < M)
            {
                cublasSscal(M - kp1, 1.0f / valcheck, A + kp1 + k * lda, 1);
            }

            // Rank-1 update of the trailing submatrix
            if (kp1 < minDim)
            {
                cublasSger(M - kp1, N - kp1, -1.0f,
                           A + kp1 + k * lda, 1,
                           A + k + kp1 * lda, lda,
                           A + kp1 * lda + kp1, lda);
            }
        }
        CHECK_CUBLAS("decomposeLU pb");
    }

As a further improvement, it would be nice to avoid the cublasGetVector() call, which copies the pivot back to the host at every iteration. One solution would be to write this function as a kernel instead of using cuBLAS. Any other idea is welcome!

We can now use DecomposeLU() while performing the block decomposition:

    //***************************************************************************************
    // void DecomposeBlockedLU( int M, int N, int lda,
    //                          float* A,
    //                          int* P, int blockSize, float epsilon, InfoStat& stat )
    //
    // M         : Num of rows of A
    // N         : Num of columns of A
    // A         : Float matrix of size M*N (column-major, in device memory);
    //             on output contains the result of the LU decomposition.
    //             The diagonal elements of L are not stored in A (assuming they are all 1)
    // lda       : Leading dim of A, lda >= std::max(1,M)
    // P         : Permutation vector of size M
    // blockSize : Size of the submatrices;
    //             if blockSize > std::min(M,N) || blockSize == 1 the unblocked decomposition is called
    // epsilon   : Epsilon (used to test for singularities)
    // stat      : return status
    //***************************************************************************************
    void DecomposeBlockedLU(int M, int N, int lda,
                            float* A,
                            int* P, int blockSize, float epsilon, InfoStat& stat)
    {
        cublasStatus cuStat;

        // Preconditions
        if (M <= 0 || N <= 0 || lda < std::max(1, M))
        {
            stat._info = -1;
            if (M <= 0)
                stat._str = "M<=0";
            if (N <= 0)
                stat._str = "N<=0";
            if (lda < std::max(1, M))
                stat._str = "lda < std::max(1,M)";
            return;
        }

        int minSize = std::min(M, N);

        if (blockSize > minSize || blockSize == 1)
        {
            // straight LU decomposition
            DecomposeLU(M, N, lda, A, P, epsilon, stat);
        }
        else
        {
            // blocked decomposition
            for (int i = 0; i < minSize; i += blockSize)
            {
                int realBlockSize = std::min(minSize - i, blockSize);

                // decompose the current rectangular block
                DecomposeLU(M - i, realBlockSize, lda, A + i + i * lda, P + i, epsilon, stat);

                // adjust pivot infos (DecomposeLU returns rows relative to the panel)
                // Todo : write a kernel for that
                for (int p = i; p < std::min(M, i + realBlockSize); p++)
                {
                    P[p] = P[p] + i;
                    if (P[p] != p)
                    {
                        // Apply interchanges to columns 0:i.
                        cublasSswap(i, A + p, lda, A + P[p], lda);
                        // Apply interchanges to columns i+realBlockSize:N.
                        cublasSswap(N - i - realBlockSize,
                                    A + p + (i + realBlockSize) * lda, lda,
                                    A + P[p] + (i + realBlockSize) * lda, lda);
                    }
                }

                // Compute block row of U.
                cublasStrsm('l', 'l', 'n', 'u', realBlockSize, N - i - realBlockSize, 1.0f,
                            A + i + i * lda, lda,
                            A + i + (i + realBlockSize) * lda, lda);
                CHECK_CUBLAS("decomposeBlockedLU cublasStrsm");

                // Update the trailing submatrix.
                if (i + realBlockSize < M)
                {
                    cublasSgemm('n', 'n', M - i - realBlockSize, N - i - realBlockSize, realBlockSize,
                                -1.0f,
                                A + i + realBlockSize + i * lda, lda,
                                A + i + (realBlockSize + i) * lda, lda,
                                1.0f,
                                A + i + realBlockSize + (realBlockSize + i) * lda, lda);
                    CHECK_CUBLAS("decomposeBlockedLU cublasSgemm");
                }
            }
        }
    }

Performance improvement :

For a random 10 000*10 000 matrix, DecomposeBlockedLU runs in about 3 seconds on my Quadro FX 4800, versus 98 seconds if we use DecomposeLU alone.

This code is used as a basis for Radial Basis Function interpolation computed on the GPU. I'm still eager to get better performance, and I'm considering writing some parts of it as a CUDA kernel. If you have any suggestion, or if you know of an alternative implementation, please drop me an email or a comment.

Hello,

Thanks for this code.

Under which license is it released ?

thanks

S

Hi Sylvestre

This code is released under BSD license.

Cheers.

Hello,

I need to use a function like this in order to solve a linear system whose matrix does not fit in the device memory.

Do you think it is possible to use the same approach for this task?

Do you have any suggestions for me?

Thanks a lot in advance for your help

Best regards

Andrea

Hi Andrea,

Yes, I think it would not be too difficult to adapt the code to a hybrid GPU/CPU solution.

Start with a CPU Blocked LU (choosing a block size that fits in the device memory), then solve each block using the GPU blocked LU as shown in this post.

You can implement a CPU Blocked LU using LAPACK.

For this implementation, instead of calling DecomposeLU() the CPU Blocked LU will call the GPU blocked LU.

Cheers,

Hi,

First, thanks for your article – it’s really clear and helpful.

I think i’ve found a small mistake in the code, though : in DecomposeBlockedLU(), line 67, shouldn’t the first dimension argument be “M-i-realBlockSize” (and not “N-i-realBlockSize” which appears twice) ?

`cublasSgemm('n','n', M-i-realBlockSize, N-i-realBlockSize, realBlockSize,`

Best regards,

Clément

Hi Clement,

Thanks for your feedback.

You are perfectly right, it is `cublasSgemm('n','n', M-i-realBlockSize, N-i-realBlockSize, realBlockSize`

I updated the article.

Cheers.

F.

Hi,

another small question about the pivoting: in the code, it doesn’t seem to me that P[minDim] is ever set – is that normal?

It’s up to the client code to initialize the vector with a sequence (0,1,2 … ,M-1)

OK. Anyway, unless i’m mistaken, using the resulting P as a permutation matrix does not require to ever consider the last component (swapping iteratively the rows, starting at 0 and to M-2, should put the M rows to their “correct” position) – or am i mistaken?

It sounds good. If B is a "column" device vector of size M, you can do:

    for (int i = 0; i < M-1; i++)
        if (i != P[i]) cublasSswap(1, B+i, 1, B+P[i], 1);
